StrokeGAN Painter: Learning to Paint Artworks Using Stroke-Style Generative Adversarial Networks

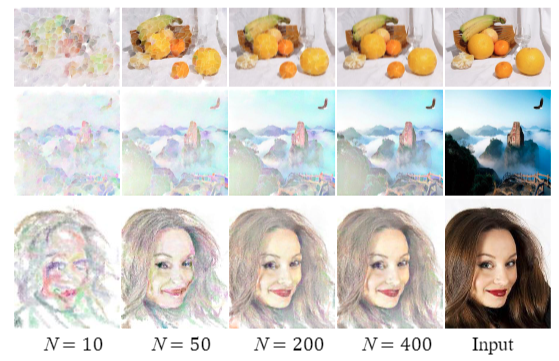

Teaching machines to paint like human artists in a stroke-by-stroke manner is a challenging task. Despite recent advances in stroke-based and deep learning-based image rendering, existing painting methods have three limitations: 1) they lack the flexibility to choose different strokes to paint with, 2) they lose content details of images, and 3) they generate few styles of paintings. In this paper, we propose a Stroke-Style Generative Adversarial Network (StrokeGAN) to address the first two limitations. StrokeGAN learns stroke styles from different stroke-style datasets and produces diverse stroke styles with adjustable shape, size, transparency, and color. We design three players in StrokeGAN to generate pure-color strokes close to human artists’ strokes, thereby improving the quality of painting details. To address the third limitation, we build on StrokeGAN and devise a neural network named StrokeGAN Painter that generates different styles of paintings (such as oil, pastel, and watercolor paintings). Experiments demonstrate that our painter generates different styles of paintings while preserving content details (such as human face texture and details of buildings) and retaining a higher similarity to the given images. User studies with participants of various backgrounds, ages, and genders show that the paintings generated by our painter receive the most votes against state-of-the-art methods in terms of fidelity and stylization, averaging 76.9% of votes for pastel paintings and 82.5% of votes for oil paintings.