Edge-Based Communication Optimization for Distributed Federated Learning

Abstract

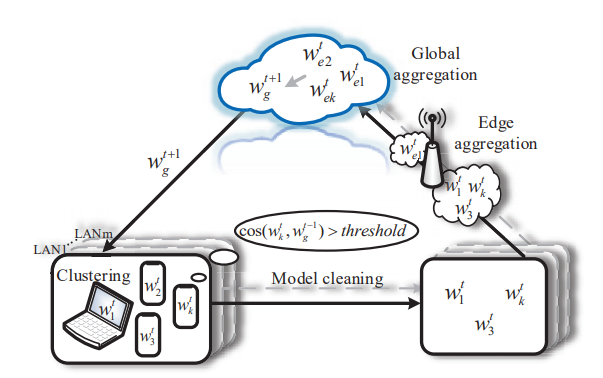

Federated learning enables distributed machine learning without sharing the private and sensitive data of end devices. However, highly concurrent access to the server increases the transmission delay of model updates, and a local model may be unnecessary if its gradient points in the opposite direction from that of the global model, thus incurring substantial additional communication cost. To this end, we study an edge-based communication optimization framework that reduces the number of end devices directly connected to the server while avoiding the upload of unnecessary local updates. Specifically, we cluster devices in the same network location and deploy mobile edge nodes in different network locations to serve as hubs for communication between the cloud and end devices, thereby avoiding the latency associated with high server concurrency. Meanwhile, we propose a model cleaning method based on cosine similarity: if the similarity value is less than a preset threshold, the local update is not uploaded to the mobile edge nodes, thus avoiding unnecessary communication. Experimental results show that, compared with traditional federated learning, the proposed scheme reduces the number of local updates by 60% and accelerates the convergence of the regression model by 10.3%.
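
The cosine-similarity filter described in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the function names, the flat-list representation of an update, the choice to compare each local update against the latest global update, and the plain averaging at the edge node are all assumptions made for illustration. The abstract specifies only that an update whose similarity falls below a preset threshold is not uploaded.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        # Assumption: a zero vector carries no direction, so treat it as similarity 0.
        return 0.0
    return dot / (norm_u * norm_v)


def filter_updates(local_updates, global_update, threshold):
    """Keep only the local updates whose similarity to the global update
    reaches the threshold; the rest are never sent to the edge node.

    local_updates: dict mapping a (hypothetical) device id to a flat list of floats.
    """
    return {
        device: update
        for device, update in local_updates.items()
        if cosine_similarity(update, global_update) >= threshold
    }


def edge_aggregate(updates):
    """Assumed edge-node step: element-wise mean of the kept updates.
    Returns None when no update passed the filter."""
    if not updates:
        return None
    vectors = list(updates.values())
    return [sum(column) / len(vectors) for column in zip(*vectors)]


if __name__ == "__main__":
    global_update = [1.0, 0.0]
    local_updates = {
        "device_a": [1.0, 0.1],   # nearly aligned with the global update
        "device_b": [-1.0, 0.0],  # opposite direction, filtered out
    }
    kept = filter_updates(local_updates, global_update, threshold=0.5)
    print(sorted(kept))
    print(edge_aggregate(kept))
```

In this sketch the threshold is a free parameter. A value near 1 keeps only updates closely aligned with the global direction, while a value of 0 discards only those pointing away from it.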

Bibtex

@ARTICLE{9446648,

author={Wang, Tian and Liu, Yan and Zheng, Xi and Dai, Hong-Ning and Jia, Weijia and Xie, Mande},

journal={IEEE Transactions on Network Science and Engineering},

title={Edge-Based Communication Optimization for Distributed Federated Learning},

year={2021},

volume={},

number={},

pages={1-1},

doi={10.1109/TNSE.2021.3083263}

}
